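At Computex 2023 in Taipei, NVIDIA made several major announcements. The most notable were the news that its GH200 Grace Hopper Superchip is in full production and the launch of the DGX GH200 supercomputer built on it. DGX GH200 is a supercomputing platform designed for large-scale generative AI workloads: it connects 256 GH200 superchips into a single system with 144 TB of shared memory and 1 exaFLOPS of FP8 AI performance. This article describes the technical features and advantages of these products and their impact on the AI field.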
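The 144 TB figure can be reproduced from per-superchip memory. This is a minimal sketch, assuming each GH200 contributes 96 GB of HBM3 plus 480 GB of LPDDR5X (the launch configuration) and that the headline "TB" is 1024-based; the helper name is illustrative, not an NVIDIA API.

```python
# Assumed per-superchip memory for the launch GH200 configuration.
HBM3_GB = 96          # GPU-attached HBM3
LPDDR5X_GB = 480      # CPU-attached LPDDR5X
NUM_SUPERCHIPS = 256  # GH200 superchips in one DGX GH200


def total_shared_memory_gb(num_chips: int, hbm_gb: int, lpddr_gb: int) -> int:
    """Total memory when every superchip's HBM3 and LPDDR5X is pooled (hypothetical helper)."""
    return num_chips * (hbm_gb + lpddr_gb)


total_gb = total_shared_memory_gb(NUM_SUPERCHIPS, HBM3_GB, LPDDR5X_GB)
total_tb = total_gb / 1024  # 1024-based "TB", matching the 144 TB headline
print(total_gb, total_tb)   # 147456 144.0
```

With 512 GB of LPDDR5X per chip instead, the same calculation gives 256 × 608 GB = 152 TB, so the 144 TB headline is consistent with 480 GB per superchip.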

GH200 Superchip

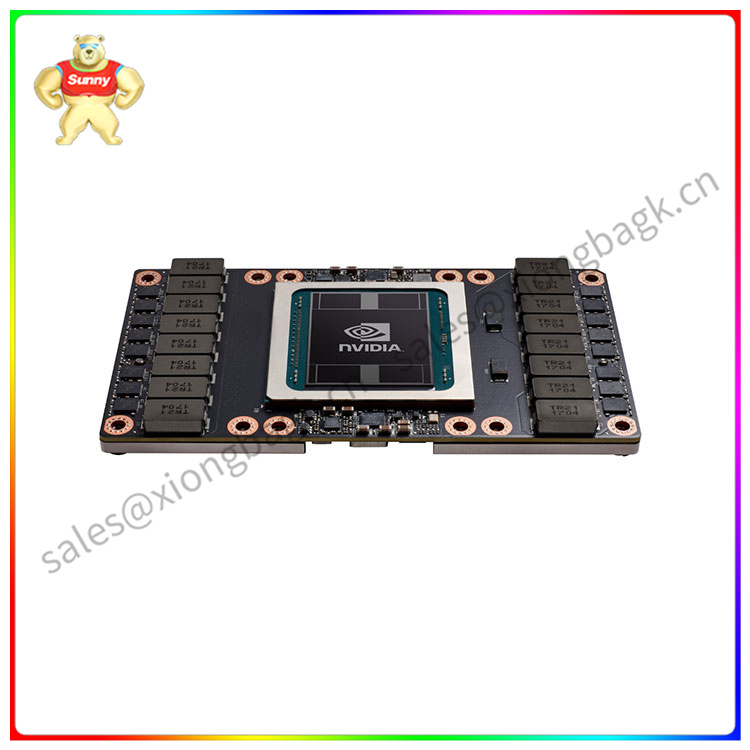

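The GH200 is NVIDIA's latest superchip. It combines a Grace CPU and an H100 GPU on one module with a total of 200 billion transistors. Grace is NVIDIA's own high-performance Arm-based CPU, and H100 is NVIDIA's Hopper-architecture Tensor Core GPU, whose Tensor Cores support FP64, TF32, BF16, FP16, FP8 and INT8. Instead of a conventional PCIe link between CPU and GPU, the two chips are joined by NVIDIA's NVLink-C2C chip-to-chip interconnect, which provides a coherent CPU+GPU memory model: each processor can access the other's memory directly.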
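The floating-point formats listed above trade numeric range for precision. The sketch below computes the largest finite value of each format from its exponent and mantissa bit widths; the function name is illustrative, and FP8 E4M3 is treated as a special case because it has no infinities and reserves only one bit pattern per sign for NaN. INT8 is an integer format and is left out.

```python
def max_finite(exp_bits: int, man_bits: int, ieee_like: bool = True) -> float:
    """Largest finite value of a binary floating-point format with the given field widths."""
    bias = 2 ** (exp_bits - 1) - 1
    if ieee_like:
        # IEEE 754 style: the all-ones exponent is reserved for Inf/NaN.
        max_exp = (2 ** exp_bits - 2) - bias
        max_significand = 2.0 - 2.0 ** -man_bits
    else:
        # FP8 E4M3 style: the all-ones exponent still holds normal numbers;
        # only the all-ones mantissa there encodes NaN, and there is no Inf.
        max_exp = (2 ** exp_bits - 1) - bias
        max_significand = 2.0 - 2.0 ** (1 - man_bits)
    return max_significand * 2.0 ** max_exp


# name: (exponent bits, mantissa bits, IEEE-style special values?)
FORMATS = {
    "FP32": (8, 23, True),
    "TF32": (8, 10, True),
    "BF16": (8, 7, True),
    "FP16": (5, 10, True),
    "FP8 E5M2": (5, 2, True),
    "FP8 E4M3": (4, 3, False),
}

for name, (e, m, ieee) in FORMATS.items():
    print(f"{name:9s} max finite = {max_finite(e, m, ieee):.6g}")
```

This gives 65504 for FP16, 57344 for FP8 E5M2 and only 448 for FP8 E4M3, which is why FP8 tensors generally need a scaling factor to stay in range.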

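NVLink-C2C provides 900 GB/s of total bidirectional bandwidth between the CPU and GPU, about 7x that of a PCIe Gen 5 x16 link, which is a major advantage for workloads limited by memory bandwidth or capacity. Each GH200 also carries 96 GB of HBM3 attached to the GPU and up to 480 GB of LPDDR5X attached to the CPU, giving large AI models a combined 576 GB of fast memory to work with.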
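To put the link bandwidth in perspective, here is an idealized back-of-the-envelope calculation. It assumes peak rates of 450 GB/s per direction for NVLink-C2C (900 GB/s total) and 64 GB/s per direction for PCIe Gen 5 x16, and ignores latency, protocol overhead and the gap between peak and achievable bandwidth; the 96 GB payload is just an example.

```python
# Assumed peak link bandwidths in GB/s, per direction.
NVLINK_C2C_GBPS = 450.0     # 900 GB/s total, split across both directions
PCIE_GEN5_X16_GBPS = 64.0   # ~128 GB/s total for a x16 link


def transfer_seconds(size_gb: float, link_gbps: float) -> float:
    """Idealized one-way transfer time: size divided by peak bandwidth."""
    return size_gb / link_gbps


payload_gb = 96.0  # example: moving a full HBM3's worth of data from LPDDR5X
for name, bw in [("NVLink-C2C", NVLINK_C2C_GBPS), ("PCIe Gen5 x16", PCIE_GEN5_X16_GBPS)]:
    print(f"{name:14s} {transfer_seconds(payload_gb, bw):.3f} s")

print(f"ratio = {NVLINK_C2C_GBPS / PCIE_GEN5_X16_GBPS:.1f}x")
```

Under these assumptions, moving 96 GB takes about 0.21 s over NVLink-C2C versus 1.5 s over PCIe Gen 5 x16, matching the roughly 7x ratio.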

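The GH200 is also optimized for Transformer models. The Transformer architecture underlies large models from BERT to GPT-4 and is increasingly used in other fields such as computer vision and protein structure prediction. Hopper's Transformer Engine, combined with its FP8 Tensor Cores, delivers up to 9x faster AI training and up to 30x faster AI inference on large language models compared with A100-based servers. The Transformer Engine dynamically chooses between FP8 and 16-bit precision layer by layer to make full use of the available compute, and the H100's FP16 Tensor Core throughput alone is 3x that of the A100.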
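The core idea behind running a layer in FP8 is per-tensor scaling: multiply the tensor so its largest magnitude (amax) maps onto the FP8 maximum, round to FP8, and divide the scale back out afterward. The following is a simplified pure-Python simulation, not NVIDIA's Transformer Engine implementation (which, among other things, tracks a history of amax values and manages the choice between FP8 and 16-bit precision). `round_to_e4m3` and `quantize_dequantize` are hypothetical helpers; the sketch emulates E4M3 round-to-nearest-even and saturates at ±448.

```python
import math

E4M3_MAX = 448.0      # largest finite FP8 E4M3 value
E4M3_MIN_EXP = -6     # exponent of the smallest normal E4M3 value (2**-6)
E4M3_MAN_BITS = 3


def round_to_e4m3(x: float) -> float:
    """Round x to the nearest FP8 E4M3 value (ties to even), saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    _, e = math.frexp(a)                    # a = m * 2**e with 0.5 <= m < 1
    exp = max(e - 1, E4M3_MIN_EXP)          # subnormals share the smallest exponent
    step = 2.0 ** (exp - E4M3_MAN_BITS)     # spacing of representable values here
    q = round(a / step) * step              # Python's round() ties to even
    return sign * min(q, E4M3_MAX)


def quantize_dequantize(values: list[float]) -> tuple[list[float], float]:
    """Scale so amax maps to 448, round to E4M3, then undo the scale."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    dequantized = [round_to_e4m3(v * scale) / scale for v in values]
    return dequantized, scale


values = [0.01, -3.0, 250.0, 1200.0]
scaled, scale = quantize_dequantize(values)
unscaled = [round_to_e4m3(v) for v in values]
print("scale:", scale)
print("with scaling:   ", scaled)
print("without scaling:", unscaled)  # 1200.0 saturates to 448.0
```

Without a scale factor, the 1200.0 entry is clipped to 448; with scaling it survives, at the cost of coarser relative precision for the small values.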
